day 16 photo sticker app

camera sticker app

import cv2

import matplotlib.pyplot as plt

import numpy as np

print("🌫🛸")

🌫🛸

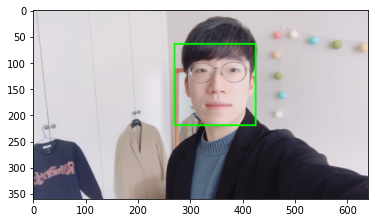

open image

import os

my_image_path = os.getenv('HOME')+'/aiffel/camera_sticker/images/image.png'

img_bgr = cv2.imread(my_image_path) # read the image with OpenCV (BGR channel order)

img_bgr = cv2.resize(img_bgr, (640, 360)) # resize to 640x360

img_show = img_bgr.copy() # keep a separate copy for drawing and display

plt.imshow(img_bgr) # colors look wrong here: OpenCV loads BGR, matplotlib expects RGB

plt.show()

# Don't forget to convert the image to RGB before calling plt.imshow.

img_rgb = cv2.cvtColor(img_bgr, cv2.COLOR_BGR2RGB)

plt.imshow(img_rgb)

plt.show()

face detection

We use object detection to locate the face, relying on an already trained open-source package.

dlib’s face detector computes HOG (Histogram of Oriented Gradients) features and scans the image with a sliding window, using an SVM (Support Vector Machine) to decide whether each window contains a face.

Machine Learning is Fun! Part 4: Modern Face Recognition with Deep Learning

We use the image gradient as the feature because the change in brightness between neighboring pixels often describes an object more reliably than the raw RGB values of the pixels themselves. If we analyze pixels directly, really dark images and really light images of the same person will have totally different pixel values. But by only considering the direction that brightness changes, both really dark images and really bright images will end up with the same exact representation. That makes the problem a lot easier to solve!
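As a small illustration of the brightness argument above, the sketch below compares gradient directions for a synthetic patch and a darkened copy of it. The random patch, the 0.25 darkening factor, and the helper name `gradient_direction` are assumptions made for this example and are not part of dlib. Real photos also clip and pick up noise, so in practice the match is only approximate.

```python
import numpy as np

def gradient_direction(gray):
    """Return the per-pixel gradient direction (radians) of a grayscale image."""
    # np.gradient returns the derivative along rows (y) first, then columns (x).
    gy, gx = np.gradient(gray.astype(np.float64))
    return np.arctan2(gy, gx)

rng = np.random.default_rng(0)
bright = rng.uniform(100, 250, size=(8, 8))  # hypothetical bright patch
dark = bright * 0.25                         # same scene, much darker

same_direction = np.allclose(gradient_direction(bright), gradient_direction(dark))
same_pixels = np.allclose(bright, dark)
print(same_direction, same_pixels)  # prints: True False
```

The raw pixel values differ everywhere, while the gradient directions agree, which is the property the HOG feature relies on.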

We’ll break up the image into small squares of 16x16 pixels each. In each square, we’ll count up how many gradients point in each major direction (how many point up, point up-right, point right, etc…). Then we’ll replace that square in the image with the arrow directions that were the strongest.
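The cell-by-cell step described above might be sketched as follows with NumPy alone. This is a simplified illustration, not dlib's actual HOG implementation: the function name `dominant_directions`, the choice of 8 direction bins, and the magnitude-weighted voting are assumptions for the example.

```python
import numpy as np

def dominant_directions(gray, cell=16, bins=8):
    """For each cell x cell square, return the index of the strongest gradient direction.

    Bin k is centered on k * (360 / bins) degrees, measured from the +x axis
    toward the +y axis (which points down in image coordinates).
    """
    gy, gx = np.gradient(gray.astype(np.float64))
    magnitude = np.hypot(gx, gy)
    angle = np.arctan2(gy, gx) % (2 * np.pi)
    bin_index = np.rint(angle / (2 * np.pi / bins)).astype(int) % bins

    rows, cols = gray.shape[0] // cell, gray.shape[1] // cell
    result = np.zeros((rows, cols), dtype=int)
    for r in range(rows):
        for c in range(cols):
            window = (slice(r * cell, (r + 1) * cell), slice(c * cell, (c + 1) * cell))
            # Each pixel votes for its direction bin, weighted by gradient strength.
            votes = np.bincount(bin_index[window].ravel(),
                                weights=magnitude[window].ravel(),
                                minlength=bins)
            result[r, c] = votes.argmax()
    return result

# Brightness increases to the right, so every cell votes for bin 0 (pointing right).
ramp = np.tile(np.arange(32.0), (32, 1))
right_cells = dominant_directions(ramp)
# Transposed, brightness increases downward, which lands in bin 2 (90 degrees).
down_cells = dominant_directions(ramp.T)
```

A 32x32 input yields a 2x2 grid of cells here; a full HOG descriptor would keep the whole histogram per cell and normalize it, which this sketch leaves out.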

import dlib

detector_hog = dlib.get_frontal_face_detector() # declare the detector

print("🌫🛸")

🌫🛸

img_rgb = cv2.cvtColor(img_bgr, cv2.COLOR_BGR2RGB)

dlib_rects = detector_hog(img_rgb, 1) # (image, number of image pyramid upsampling steps)

print("🌫🛸")

🌫🛸

print(dlib_rects) # coordinates of the detected face regions

for dlib_rect in dlib_rects:

    l = dlib_rect.left()

    t = dlib_rect.top()

    r = dlib_rect.right()

    b = dlib_rect.bottom()

    cv2.rectangle(img_show, (l,t), (r,b), (0,255,0), 2, lineType=cv2.LINE_AA)

img_show_rgb = cv2.cvtColor(img_show, cv2.COLOR_BGR2RGB)

plt.imshow(img_show_rgb)

plt.show()

rectangles[[(270, 64) (425, 219)]]

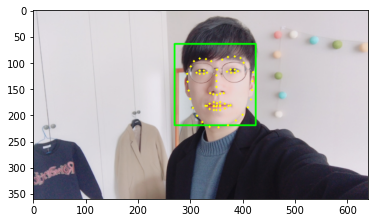

face landmark

To use facial recognition we must use face landmark localization technology.

Object keypoint estimation algorithms:

- top-down: find the bounding box, then estimate the keypoints within the box

- bottom-up: find the keypoints in the image, then cluster them to create a box

import os

model_path = os.getenv('HOME')+'/aiffel/camera_sticker/models/shape_predictor_68_face_landmarks.dat'

landmark_predictor = dlib.shape_predictor(model_path)

print("🌫🛸")

🌫🛸

list_landmarks = []

for dlib_rect in dlib_rects:

    points = landmark_predictor(img_rgb, dlib_rect) # 68 landmarks for this face

    list_points = list(map(lambda p: (p.x, p.y), points.parts()))

    list_landmarks.append(list_points)

print(dlib_rects)

rectangles[[(270, 64) (425, 219)]]

for landmark in list_landmarks:

    for idx, point in enumerate(landmark): # iterate over this face's own points

        cv2.circle(img_show, point, 2, (0, 255, 255), -1) # yellow

img_show_rgb = cv2.cvtColor(img_show, cv2.COLOR_BGR2RGB)

plt.imshow(img_show_rgb)

plt.show()

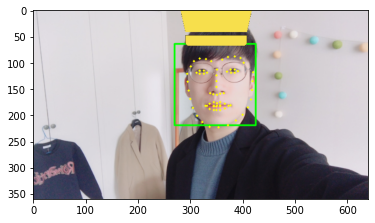

adding the sticker

for dlib_rect, landmark in zip(dlib_rects, list_landmarks):

    print (landmark[30]) # nose center index : 30

    x = landmark[30][0]

    y = landmark[30][1] - dlib_rect.width()//2 # move up from the nose by half the face width

    w = dlib_rect.width()

    h = dlib_rect.width()

    print ('(x,y) : (%d,%d)'%(x,y))

    print ('(w,h) : (%d,%d)'%(w,h))

(350, 146)

(x,y) : (350,68)

(w,h) : (156,156)

We read the crown image then resize it to (w,h)

import os

sticker_path = os.getenv('HOME')+'/aiffel/camera_sticker/images/king.png'

img_sticker = cv2.imread(sticker_path)

img_sticker = cv2.resize(img_sticker, (w,h))

print (img_sticker.shape)

(156, 156, 3)

refined_x = x - w // 2 # left

refined_y = y - h # top

print ('(x,y) : (%d,%d)'%(refined_x, refined_y))

(x,y) : (272,-88)

img_sticker = img_sticker[-refined_y:]

print (img_sticker.shape)

(68, 156, 3)

refined_y = 0

print ('(x,y) : (%d,%d)'%(refined_x, refined_y))

(x,y) : (272,0)

sticker_area = img_show[refined_y:refined_y+img_sticker.shape[0], refined_x:refined_x+img_sticker.shape[1]]

# keep the background where the sticker value is 0 (black), otherwise draw the sticker
img_show[refined_y:refined_y+img_sticker.shape[0], refined_x:refined_x+img_sticker.shape[1]] = \
    np.where(img_sticker==0,sticker_area,img_sticker).astype(np.uint8)

plt.imshow(cv2.cvtColor(img_show, cv2.COLOR_BGR2RGB))

plt.show()

sticker_area = img_bgr[refined_y:refined_y+img_sticker.shape[0], refined_x:refined_x+img_sticker.shape[1]]

# same overlay on the clean image, without the bounding box and landmarks
img_bgr[refined_y:refined_y+img_sticker.shape[0], refined_x:refined_x+img_sticker.shape[1]] = \
    np.where(img_sticker==0,sticker_area,img_sticker).astype(np.uint8)

plt.imshow(cv2.cvtColor(img_bgr, cv2.COLOR_BGR2RGB))

plt.show()

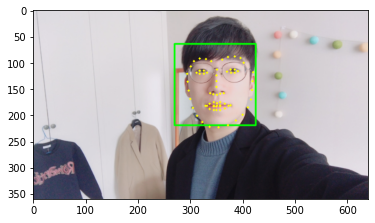

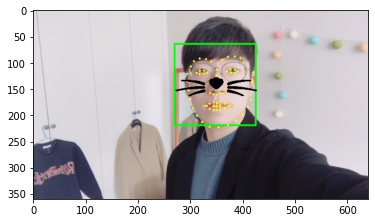
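The cropping steps above handle a sticker that sticks out past the top edge of the image. A more general version, clipping against all four edges, could look like the sketch below. The helper name `overlay_sticker` and its parameters are hypothetical, and it keeps this post's convention that sticker values equal to 0 are treated as transparent.

```python
import numpy as np

def overlay_sticker(background, sticker, left, top, transparent_value=0):
    """Paste `sticker` onto a copy of `background` with its top-left corner at (left, top).

    Parts of the sticker that fall outside the background are cropped away.
    """
    bg_h, bg_w = background.shape[:2]
    st_h, st_w = sticker.shape[:2]

    # Region of the background that the sticker actually covers.
    y0, y1 = max(top, 0), min(top + st_h, bg_h)
    x0, x1 = max(left, 0), min(left + st_w, bg_w)
    out = background.copy()
    if y0 >= y1 or x0 >= x1:
        return out  # the sticker lies entirely outside the image

    # Matching region of the sticker, in sticker coordinates.
    crop = sticker[y0 - top:y1 - top, x0 - left:x1 - left]
    area = out[y0:y1, x0:x1]
    out[y0:y1, x0:x1] = np.where(crop == transparent_value, area, crop)
    return out

background = np.full((4, 4, 3), 100, dtype=np.uint8)
sticker = np.full((2, 2, 3), 200, dtype=np.uint8)
sticker[0, 0] = 0  # one transparent pixel

inside = overlay_sticker(background, sticker, left=1, top=1)
clipped = overlay_sticker(background, sticker, left=-1, top=-1)
```

With the values from this post, `overlay_sticker(img_show, img_sticker, 272, -88)` would be called with the uncropped 156x156 sticker, and the function would drop the top 88 rows itself.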

cat filter self project

preparing image

import cv2

import matplotlib.pyplot as plt

import numpy as np

import os

import dlib

import math

my_image_path = os.getenv('HOME')+'/aiffel/camera_sticker/images/image.png'

img_bgr = cv2.imread(my_image_path) # read the image with OpenCV (BGR channel order)

img_bgr = cv2.resize(img_bgr, (640, 360)) # resize to 640x360

img_show = img_bgr.copy() # separate copy for display

img_show2 = img_bgr.copy() # second copy, used for the blended sticker

img_show3 = img_bgr.copy() # third copy for display

img_rgb = cv2.cvtColor(img_bgr, cv2.COLOR_BGR2RGB)

plt.imshow(img_rgb)

detector_hog = dlib.get_frontal_face_detector() # declare the detector

img_rgb = cv2.cvtColor(img_bgr, cv2.COLOR_BGR2RGB)

dlib_rects = detector_hog(img_rgb, 1) # (image, number of image pyramid upsampling steps)

for dlib_rect in dlib_rects:

    l = dlib_rect.left()

    t = dlib_rect.top()

    r = dlib_rect.right()

    b = dlib_rect.bottom()

    cv2.rectangle(img_show, (l,t), (r,b), (0,255,0), 2, lineType=cv2.LINE_AA)

img_show_rgb = cv2.cvtColor(img_show, cv2.COLOR_BGR2RGB)

plt.imshow(img_show_rgb)

model_path = os.getenv('HOME')+'/aiffel/camera_sticker/models/shape_predictor_68_face_landmarks.dat'

landmark_predictor = dlib.shape_predictor(model_path)

list_landmarks = []

for dlib_rect in dlib_rects:

    points = landmark_predictor(img_rgb, dlib_rect)

    list_points = list(map(lambda p: (p.x, p.y), points.parts()))

    list_landmarks.append(list_points)

for landmark in list_landmarks:

    for idx, point in enumerate(landmark): # iterate over this face's own points

        cv2.circle(img_show, point, 2, (0, 255, 255), -1) # yellow

img_show_rgb = cv2.cvtColor(img_show, cv2.COLOR_BGR2RGB)

plt.imshow(img_show_rgb)

plt.show()

adding sticker

for dlib_rect, landmark in zip(dlib_rects, list_landmarks):

    print (landmark[30]) # nose center index : 30

    x = landmark[30][0]

    y = landmark[30][1] # whiskers are centered on the nose, so no vertical offset

    w = dlib_rect.width()

    h = dlib_rect.width()

    print ('(x,y) : (%d,%d)'%(x,y))

    print ('(w,h) : (%d,%d)'%(w,h))

(350, 146)

(x,y) : (350,146)

(w,h) : (156,156)

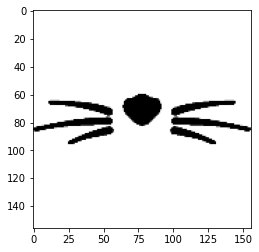

sticker_path = os.getenv('HOME')+'/aiffel/camera_sticker/images/cat-whiskers.png'

img_sticker = cv2.imread(sticker_path)

img_sticker = cv2.resize(img_sticker, (w, h))

img_sticker_rgb = cv2.cvtColor(img_sticker, cv2.COLOR_BGR2RGB)

plt.imshow(img_sticker_rgb)

plt.show()

print (img_sticker.shape)

(156, 156, 3)

refined_x = x - w // 2 # left

refined_y = y - h // 2 # top

print ('(x,y) : (%d,%d)'%(refined_x, refined_y))

(x,y) : (272,68)

if refined_y < 0:

    img_sticker = img_sticker[-refined_y:] # crop the rows that would fall above the image

    refined_y = 0

print (img_sticker.shape)

print ('(x,y) : (%d,%d)'%(refined_x, refined_y))

(156, 156, 3)

(x,y) : (272,68)

sticker_area = img_show[refined_y:refined_y+img_sticker.shape[0], refined_x:refined_x+img_sticker.shape[1]]

# this sticker has a white background, so 255 is treated as transparent
img_show[refined_y:refined_y+img_sticker.shape[0], refined_x:refined_x+img_sticker.shape[1]] = \
    np.where(img_sticker==255,sticker_area,img_sticker).astype(np.uint8)

plt.imshow(cv2.cvtColor(img_show, cv2.COLOR_BGR2RGB))

plt.show()

sticker_area = img_show2[refined_y:refined_y+img_sticker.shape[0], refined_x:refined_x+img_sticker.shape[1]]

# blend the overlaid sticker 50/50 with the face so the whiskers look semi-transparent
img_show2[refined_y:refined_y+img_sticker.shape[0], refined_x:refined_x+img_sticker.shape[1]] = \
    cv2.addWeighted(sticker_area, 0.5, np.where(img_sticker==255,sticker_area,img_sticker).astype(np.uint8), 0.5, 0)

plt.imshow(cv2.cvtColor(img_show2, cv2.COLOR_BGR2RGB))

plt.show()
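`cv2.addWeighted(src1, alpha, src2, beta, gamma)` computes `src1*alpha + src2*beta + gamma` per pixel and saturates the result to the image type, so the 0.5/0.5 call above averages the sticker with the face underneath. The NumPy sketch below mirrors that arithmetic on a tiny array. `blend` is a hypothetical helper written for illustration, the pixel values are made up, and its rounding may differ slightly from OpenCV's.

```python
import numpy as np

def blend(src1, alpha, src2, beta, gamma=0.0):
    """Weighted sum of two uint8 images, clipped back into the 0..255 range."""
    mixed = src1.astype(np.float64) * alpha + src2.astype(np.float64) * beta + gamma
    return np.clip(np.rint(mixed), 0, 255).astype(np.uint8)

face = np.full((2, 2, 3), 200, dtype=np.uint8)     # stand-in for the face region
whiskers = np.full((2, 2, 3), 40, dtype=np.uint8)  # stand-in for dark sticker pixels

half = blend(face, 0.5, whiskers, 0.5)   # 200*0.5 + 40*0.5 = 120 everywhere
saturated = blend(face, 1.0, face, 1.0)  # 400 is clipped to 255
```

Raising the first weight and lowering the second (for example 0.7 and 0.3) would make the sticker fainter, since more of the original face shows through.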
